Note: Sea conditions in the competition area at the beginning of September: water temperature between 21 and 23 °C, salinity about 32‰ (parts per thousand), currents below 2 knots, and transparency of about 2 to 5 meters.

1 Competition background

1.1 Purpose and significance

To promote the theory, technology and industry of agile underwater robots, and to fill the gap in how such robots are evaluated, this competition focuses on underwater grasping tasks that target sea cucumbers, scallops and sea urchins. It provides a competition platform and detailed task rules to further advance research on underwater target recognition and detection algorithms and techniques. Centered on data, the competition builds a closed-loop evaluation platform for agile robotic grasping in real offshore waters. It renders underwater robot grasping in real time through virtual-real fusion, and combines the referees' subjective scores with an objective, data-driven self-learning evaluation model.

1.2 Organizers

The competition is hosted by the National Natural Science Foundation of China and jointly organized by Dalian University of Technology and Zhangzidao Group Co., Ltd., which also sponsors the competition preparations. The competition's guiding expert group consists of authorities from the National Natural Science Foundation of China, the Chinese Academy of Sciences, the University of Science and Technology of China, the Chinese University of Hong Kong, Beijing University of Posts and Telecommunications, and Dalian University of Technology. To ensure fairness and impartiality, jury members follow the principle of recusal: they do not take part in reviewing any task that involves themselves or their own teams.

1.3 Competition grouping

1) Target recognition technology group

2) Fixed-point grasping technology group

3) Autonomous grasping technology group

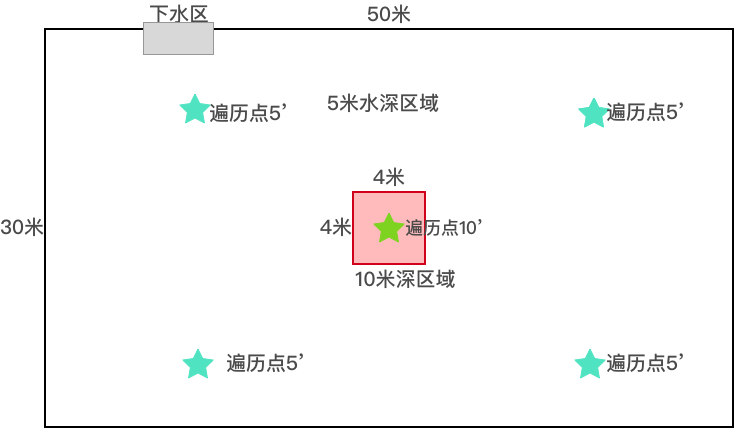

2 Competition venue and cage layout

Maritime University competition venue (preliminary venue)

Traversal points fall into two categories: four common traversal points (5 points each) and one deep-water traversal point (10 points).
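As a quick check of the arithmetic, the maximum traversal score at the preliminary venue is 4 × 5 + 10 = 30 points. A minimal Python sketch, assuming each traversal point is scored at most once (the rules do not say otherwise); the function name is hypothetical:

```python
# Point values from the rules: common traversal points earn 5 each,
# the single deep-water traversal point earns 10.
COMMON_POINT_VALUE = 5
DEEP_POINT_VALUE = 10
NUM_COMMON_POINTS = 4


def traversal_score(common_reached: int, deep_reached: bool) -> int:
    """Hypothetical helper: score for the traversal points a robot reached.

    Assumes each point counts at most once.
    """
    if not 0 <= common_reached <= NUM_COMMON_POINTS:
        raise ValueError("common_reached must be between 0 and 4")
    deep = DEEP_POINT_VALUE if deep_reached else 0
    return common_reached * COMMON_POINT_VALUE + deep


print(traversal_score(4, True))   # maximum: 30
print(traversal_score(2, False))  # 10
```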

The sea cucumbers, scallops and sea urchins used in the preliminary competition and in the autonomous grasping task are all models, with the following specifications:

Sea cucumber: length 11 cm, diameter 3.6 cm

Scallop: height 12 cm, width 12 cm, thickness 3 cm

Sea urchin: body diameter 7 cm excluding spines, spines up to 3 cm long, with a base at the bottom

Weight:

Sea cucumbers weigh about 180 g, sea urchins about 190 g and scallops about 230 g. Tests have confirmed that all three models sink to the seabed.

Zhangzidao sea area competition venue (final venue)

3 Target recognition technology group

3.1 Offline test database and competition rules

1. Database overview

After decompressing the database archive, the folder structure is as follows:

train/
|--image/
|--box/
val/
|--image/
|--box/
test/
|--image/
devkit/
|--data/
|--evaluation/

The training videos are stored in the train/image folder. Each video is divided into several clips (numbered from 000), and the frames of each clip are saved in their own folder. For example, train/image/G0024172/002/0205.jpg is frame 205 of video G0024172, located in clip 002 of that video. Its label is in train/box/G0024172/002/0205.xml. Each annotation file contains the category and bounding-box position of every object in the corresponding frame. The val/ and test/ folders are organized like train/.
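Because the image and label trees mirror each other, the annotation path can be derived from the image path. A minimal Python sketch, assuming the split/image/<video>/<clip>/<frame>.jpg layout described above; the helper names are hypothetical:

```python
from pathlib import PurePosixPath


def annotation_path(image_path: str) -> str:
    """Map split/image/<video>/<clip>/<frame>.jpg to split/box/<video>/<clip>/<frame>.xml."""
    p = PurePosixPath(image_path)
    split, kind, video, clip, frame = p.parts[-5:]
    if kind != "image":
        raise ValueError(f"expected an 'image' directory, got {kind!r}")
    return str(PurePosixPath(split, "box", video, clip, frame).with_suffix(".xml"))


def frame_info(image_path: str) -> tuple[str, str, int]:
    """Return (video name, clip id, frame number) for an image path."""
    p = PurePosixPath(image_path)
    return p.parts[-3], p.parts[-2], int(p.stem)


print(annotation_path("train/image/G0024172/002/0205.jpg"))
# train/box/G0024172/002/0205.xml
print(frame_info("train/image/G0024172/002/0205.jpg"))
# ('G0024172', '002', 205)
```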

devkit/ contains code and data for evaluating algorithm performance. The file devkit/data/train.txt lists all training images, one per line, in the format "video_name/snippet_id/frame_name image id": the first item is the path of a training image and the second is its id. For example, the line "YDXJ0013/044/4417 17573" refers to the image train/image/YDXJ0013/044/4417.jpg, whose id is 17573. devkit/data/val.txt and test.txt use the same format as train.txt and list all validation and test images, respectively. devkit/data/meta_data.mat contains the category information; loading it in MATLAB yields the following struct array:
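A minimal sketch for reading these list files, assuming the two fields are separated by whitespace and that frames are stored as .jpg files, as in the example above; the helper names are hypothetical:

```python
from typing import Iterator, NamedTuple


class ListEntry(NamedTuple):
    rel_path: str   # "video_name/snippet_id/frame_name", without extension
    image_id: int


def parse_list_line(line: str) -> ListEntry:
    """Parse one line of devkit/data/train.txt (or val.txt / test.txt)."""
    rel_path, image_id = line.split()
    return ListEntry(rel_path, int(image_id))


def image_file(entry: ListEntry, split: str = "train") -> str:
    """Build the image path; assumes frames are stored as .jpg files."""
    return f"{split}/image/{entry.rel_path}.jpg"


def read_list(text: str) -> Iterator[ListEntry]:
    """Yield entries from the file contents, skipping blank lines."""
    for line in text.splitlines():
        if line.strip():
            yield parse_list_line(line)


entry = parse_list_line("YDXJ0013/044/4417 17573")
print(image_file(entry))  # train/image/YDXJ0013/044/4417.jpg
print(entry.image_id)     # 17573
```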

synsets = 1x3 struct array with fields: cls_id, name

Here cls_id is the category label (1, 2, 3) and name is the category name ("scallop", "seanurchin", "seacucumber"). devkit/evaluation/VOCreadxml.m is a MATLAB program for reading the XML annotation files.
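The organizers read annotations with VOCreadxml.m in MATLAB. A Python equivalent might look like the sketch below. It assumes the XML files follow the PASCAL VOC layout (the use of VOCreadxml suggests this, but the schema is not given here) and that the category names above are listed in cls_id order; verify both against the devkit before relying on them.

```python
import xml.etree.ElementTree as ET

# ASSUMPTION: names listed above, taken in cls_id order.
# Check against devkit/data/meta_data.mat before use.
CLASS_IDS = {"scallop": 1, "seanurchin": 2, "seacucumber": 3}


def read_boxes(xml_text: str) -> list[tuple[int, float, float, float, float]]:
    """Return (cls_id, xmin, ymin, xmax, ymax) for every annotated object.

    ASSUMPTION: PASCAL VOC-style layout,
    <object><name>..</name><bndbox><xmin>..</xmin>...</bndbox></object>.
    """
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name").strip()
        bb = obj.find("bndbox")
        coords = [float(bb.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((CLASS_IDS[name], *coords))
    return boxes


sample = """<annotation>
  <object><name>scallop</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>60</xmax><ymax>80</ymax></bndbox>
  </object>
</annotation>"""
print(read_boxes(sample))  # [(1, 10.0, 20.0, 60.0, 80.0)]
```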

2. Evaluation method

This competition follows the evaluation method of the ILSVRC2015 object detection task and uses mean average precision (mAP) as the evaluation metric (for details, see http://image-net.org/challenges/LSVRC/2015/index#maincomp). Given a ground-truth box $B_G$ with ground-truth class $C_G$, and a box $B$ with class $C$ predicted by the algorithm, the prediction counts as a correct detection when $C$ matches $C_G$ and the overlap ratio (IoU) between $B_G$ and $B$ exceeds a threshold. The IoU of the two boxes is calculated as:

$$\mathrm{IoU}(B, B_G) = \frac{\operatorname{area}(B \cap B_G)}{\operatorname{area}(B \cup B_G)}$$

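For a ground-truth box of size $m \times n$, the threshold is

$$\mathrm{thr} = \min\left(0.5,\ \frac{mn}{(m+10)(n+10)}\right)$$

so small targets are matched with a looser threshold (for example, a 10 × 10 box gives thr = 0.25).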

Detections that do not meet these conditions (wrong category, or an IoU below the threshold) are counted as false detections. Repeated detections of the same target are also counted as false detections.
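The matching rule above can be sketched in Python as follows. This is a minimal illustration, not the official devkit/evaluation/eval_detection.m: it assumes boxes are (xmin, ymin, xmax, ymax) in continuous pixel coordinates (the official ILSVRC code may use a +1 pixel convention for widths and heights), and that detections and ground truth have already been filtered to one category in one image. The helper names are hypothetical.

```python
def iou(b, bg):
    """IoU of two boxes (xmin, ymin, xmax, ymax).

    ASSUMPTION: continuous coordinates, no +1 pixel convention.
    """
    iw = max(0.0, min(b[2], bg[2]) - max(b[0], bg[0]))
    ih = max(0.0, min(b[3], bg[3]) - max(b[1], bg[1]))
    inter = iw * ih
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    area_g = (bg[2] - bg[0]) * (bg[3] - bg[1])
    union = area_b + area_g - inter
    return inter / union if union > 0 else 0.0


def iou_threshold(m, n):
    """thr = min(0.5, m*n / ((m+10)*(n+10))) for an m x n ground-truth box."""
    return min(0.5, (m * n) / ((m + 10) * (n + 10)))


def match(dets, gts):
    """Flag detections of one category in one image as correct (True) or false.

    dets: list of (confidence, box); gts: list of ground-truth boxes.
    Returns one flag per detection, in descending-confidence order.
    Each detection claims the still-unmatched ground truth with the highest
    IoU above that box's threshold, so a repeated detection is false.
    """
    matched = [False] * len(gts)
    flags = []
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, g in enumerate(gts):
            if matched[j]:
                continue
            ov = iou(box, g)
            if ov > iou_threshold(g[2] - g[0], g[3] - g[1]) and ov > best_iou:
                best_j, best_iou = j, ov
        if best_j >= 0:
            matched[best_j] = True
        flags.append(best_j >= 0)
    return flags


gt = [(0, 0, 100, 100)]
print(match([(0.9, (0, 0, 100, 100)), (0.8, (2, 2, 100, 100))], gt))
# [True, False]  (the second box is a repeated detection)
```

The "greater than" comparison follows the wording of the rule; the official script may treat the boundary case differently.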

3. Test submission format

The prediction results of each entry's algorithm are submitted as a txt file. Each line of the file corresponds to one detected target, in the following format:

<image_id> <class_id> <confidence> <xmin> <ymin> <xmax> <ymax>

Here image_id is the id of the test image (listed in devkit/data/test.txt), class_id is the object category (see devkit/data/meta_data.mat), confidence is the algorithm's confidence in this prediction, (xmin, ymin) are the coordinates of the upper-left corner of the bounding box, and (xmax, ymax) are the coordinates of the lower-right corner.
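A small sketch for writing and checking submission lines in Python. The number of decimal places is an arbitrary choice (the format above does not fix one), and the names are hypothetical.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    image_id: int
    class_id: int
    confidence: float
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def format_line(d: Detection) -> str:
    """Render one line: <image_id> <class_id> <confidence> <xmin> <ymin> <xmax> <ymax>."""
    return (f"{d.image_id} {d.class_id} {d.confidence:.4f} "
            f"{d.xmin:.1f} {d.ymin:.1f} {d.xmax:.1f} {d.ymax:.1f}")


def parse_line(line: str) -> Detection:
    """Parse and sanity-check one submission line."""
    fields = line.split()
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}")
    d = Detection(int(fields[0]), int(fields[1]), *map(float, fields[2:]))
    if d.xmax < d.xmin or d.ymax < d.ymin:
        raise ValueError("(xmax, ymax) must be the lower-right corner")
    return d


def write_submission(dets, path: str) -> None:
    """Write one detection per line to a txt file."""
    with open(path, "w") as fh:
        for d in dets:
            fh.write(format_line(d) + "\n")
```

Round-tripping each line through parse_line before submitting catches field-count and corner-order mistakes early.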

The MATLAB program for evaluating algorithms is located at devkit/evaluation/eval_detection.m. For ease of understanding, we provide the sample program devkit/evaluation/demo_eval_det.m. The program evaluates the prediction result file devkit/evaluation/demo_val_pred.txt on the val set.
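To see how the per-detection decisions become a single number, the sketch below computes average precision (AP) for one category and averages it over categories. It assumes the PASCAL VOC-style precision envelope; the official eval_detection.m may differ in details, so treat this as illustrative only. Detections of a category must be sorted by confidence across all images before they are labeled; the function names are hypothetical.

```python
def average_precision(tp_labels, num_gt):
    """AP from True/False flags sorted by descending confidence.

    ASSUMPTION: VOC-style envelope; the official script may differ.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for is_tp in tp_labels:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad so the curve starts at recall 0 and closes at recall 1.
    r = [0.0] + recalls + [1.0]
    p = [0.0] + precisions + [0.0]
    # Envelope: make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum((r[i] - r[i - 1]) * p[i]
               for i in range(1, len(r)) if r[i] != r[i - 1])


def mean_ap(per_class):
    """per_class: dict class_id -> (tp_labels, num_gt); returns the mean AP."""
    aps = [average_precision(labels, n) for labels, n in per_class.values()]
    return sum(aps) / len(aps)
```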

4. Test environment

All teams will demonstrate on site and submit test results in the format above. Three PCs with identical configurations are provided for testing, with the following hardware and software:

CPU: Intel i7-6700 @ 3.4 GHz

Memory: 32 GB

GPU: NVIDIA GeForce GTX 1080

Video memory: 8 GB

Hard disk: 2 TB SSD

Operating system: Ubuntu 14.04 LTS, with CUDA 8.0 installed. For any other software, each team must contact the organizing committee in advance to have it installed.

3.2 Online test competition rules

1. Test environment

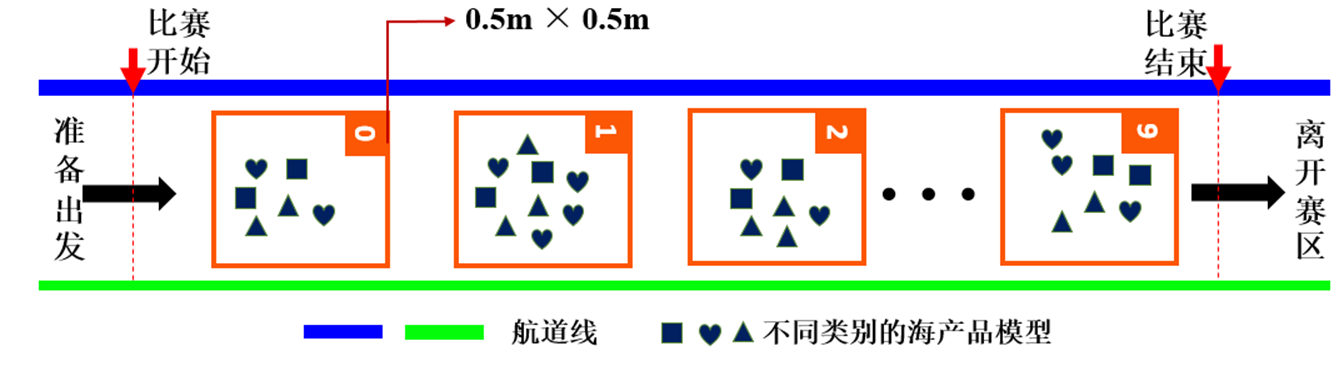

The online test environment is a 10 m × 2 m area. As shown in Figure 1, up to ten 0.8 m × 0.8 m detection areas are set within it, and the targets to be detected are placed randomly in these detection areas.

Figure 1. Online test environment

2. Competition rules

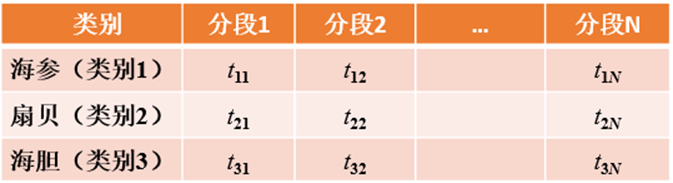

Teams bring their own equipment. The robot travels along the navigation line through the detection areas and must complete the run within the time limit. (Because the onboard processors of the teams' robots differ in performance, the time limit may be adjusted for individual teams.) Counting is done per detection area: after the detection run, each team submits the number of targets of each type detected in each detection area, together with the total number of targets of each type over all detection areas. The robot must also save its detection result images, which the team submits to the referee team after the robot is brought ashore. These are used to verify the results, so the team must provide one result screenshot for each detection area.
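A team's report needs both per-area counts and overall totals. Deriving the totals from the per-area counts guarantees that the two agree. A minimal sketch; the data layout, category labels and example counts are hypothetical:

```python
from collections import Counter

CATEGORIES = ("sea cucumber", "scallop", "sea urchin")


def zone_totals(per_zone):
    """Sum per-area counts into overall totals for each category.

    per_zone maps a detection-area number to a dict {category: count}.
    """
    total = Counter()
    for counts in per_zone.values():
        total.update(counts)  # Counter.update adds counts
    return {c: total[c] for c in CATEGORIES}


report = {
    1: {"sea cucumber": 2, "scallop": 1},
    2: {"sea urchin": 3, "scallop": 2},
}
print(zone_totals(report))
# {'sea cucumber': 2, 'scallop': 3, 'sea urchin': 3}
```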

Figure 2. Example of marked results

The result submission and labeling formats are shown in the tables below.

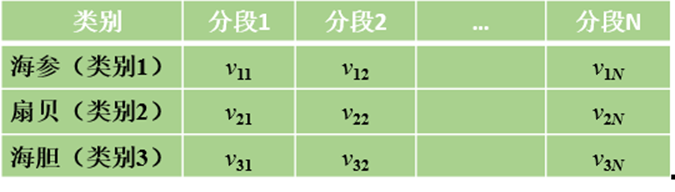

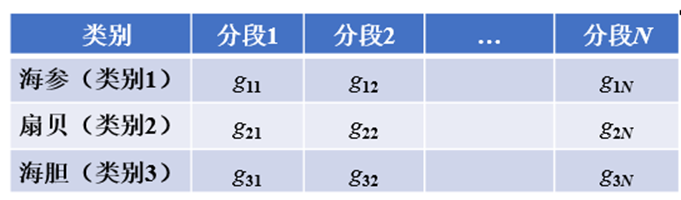

Table 1. Predicted target counts per detection area (submitted by the team)

Table 2. Predicted target counts per detection area (verified by the organizer)

Table 3. Ground-truth target counts per detection area (labeled by the organizer)

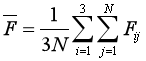

Evaluation rules: total accuracy is the ranking criterion, and the per-area counts serve as a reference for the process. The run must be completed within the time limit; a run that exceeds it is judged unsuccessful. The referee team confirms the validity of the final results by reviewing the video playback of the recognition process and the per-area results. Ranking puts accuracy first, measured by a weighted average error: the smaller the error, the more accurate the result.

If two teams have exactly the same accuracy, the team with the shorter completion time ranks higher.
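The ranking rule can be sketched as a sort on (weighted error, completion time). The weighted error is taken as an input because its formula is not reproduced in the text; dropping over-time runs from the ranking is one reading of "judged unsuccessful", and the team names are hypothetical.

```python
from typing import NamedTuple


class Result(NamedTuple):
    team: str
    error: float   # weighted average error; smaller is better
    time_s: float  # completion time in seconds


def rank(results, time_limit_s):
    """Drop runs over the time limit, then sort by error, breaking
    exact ties by shorter completion time."""
    valid = [r for r in results if r.time_s <= time_limit_s]
    return sorted(valid, key=lambda r: (r.error, r.time_s))


runs = [Result("A", 0.10, 300), Result("B", 0.10, 250),
        Result("C", 0.05, 500), Result("D", 0.00, 700)]
print([r.team for r in rank(runs, 600)])  # ['C', 'B', 'A']; D timed out
```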

4 Fixed-point grasping technology group

(1) The robot must meet the following requirements

1) The volume of a single robot (body) must not exceed 1.5 m³;

2) The robot may be either a tethered remotely operated robot or an untethered (cableless) remote-controlled robot;

3) Untethered remote-controlled robots must be able to maintain communication underwater;

4) The robot must have a certain load capacity (10 kg). The robot and its net bag may be separated, but both must return to the designated position before the end of the game;

5) The robot must grasp the targets; suction is not allowed;

6) Sensors: unrestricted; scoring is based on the indicators, that is, on demonstrated capability.

(2) Competition requirements and rules

Judging criteria: the robot moves to fixed points and grasps targets there; its mobility and grasping ability are evaluated together.

Competition rules:

1) Number of participants: each team may have at most 6 members (including 1 instructor). One team member serves as the broadcaster at the video center of the main venue, communicating with the crew by walkie-talkie and announcing the completion of scheduled tasks. For safety, all team members except the broadcaster must wear life jackets; otherwise the team will be disqualified.

2) Power consumption limit: the total energy consumed by all of a team's equipment must not exceed 1 kWh within the 30 minutes. Points are deducted if this limit is exceeded.
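For planning purposes, 1 kWh over 30 minutes corresponds to an average total draw of 2 kW (1 kWh ÷ 0.5 h). If a team logs the total power of its equipment, it can estimate consumption by trapezoidal integration, as in this sketch. How the organizer actually meters energy is not specified, and the function name is hypothetical.

```python
LIMIT_KWH = 1.0  # budget for all team equipment over the 30-minute game


def energy_kwh(times_s, power_w):
    """Trapezoidal integration of sampled total power (W) over time (s), in kWh."""
    if len(times_s) != len(power_w):
        raise ValueError("times and power samples must have the same length")
    joules = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        joules += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return joules / 3.6e6  # 1 kWh = 3.6e6 J


# A constant 2 kW draw for 30 minutes uses exactly the 1 kWh budget.
used = energy_kwh([0, 1800], [2000, 2000])
print(used, used <= LIMIT_KWH)  # 1.0 True
```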

3) Competition time: 30 minutes for fixed-point grasping, plus 20 minutes for equipment commissioning and preparation; points are deducted for exceeding the time.

4) Venue: before the game starts, the robot must swim to the underwater robot departure area and enter the competition cage on its own; after the game, the robot returns to the end position.

5) Diver assistance: during the game, a diver is dispatched only if the robot has a mechanical fault and is trapped underwater.

6) Event equipment: the organizer provides 220 V generators but does not provide cranes.

7) Force majeure: in the event of extreme weather, accidents, generator failure or similar incidents during the competition, the referee group may suspend the game or restart the timing.

8) Competition discipline: if a team member verbally abuses, behaves violently toward, or insults a referee during the game, the referee group has the right to disqualify the team.

9) Termination of the game: if the robot suffers a mechanical failure during the game, the team may, after consulting the referee group, stop the game or withdraw from it.

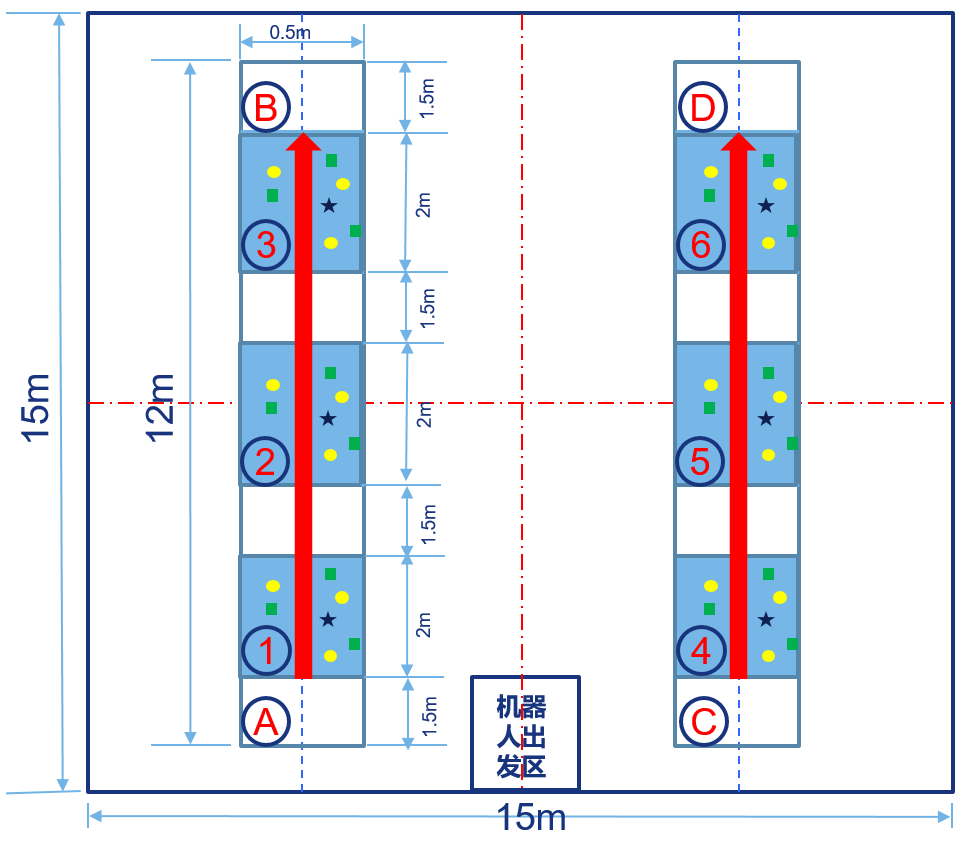

(3) Content of the game

Different numbers of sea cucumbers, scallops and sea urchins are placed in 4 rectangular areas of the pool, each about 0.5 m × 2 m (areas ①, ③, ④ and ⑥ in the 15 m × 15 m venue layout; electronic devices automatically detect whether the robot has entered each area). Within the prescribed time, the robot moves through the pool and grabs seafood; the more items and the more types it grabs, the higher the score. The robot must bring the captured seafood back to the designated location on shore before the end of the game. The counts used for scoring are the seafood returned to the designated location on shore plus the seafood the robot is carrying when it is finally unloaded.

(4) Specific game parameters

Duration of the game: 30 minutes;

Target types: three categories, namely sea cucumber, scallop (empty scallop shells are invalid) and sea urchin.

(5) Calculation method of game score

Objective evaluation (70%) is combined with subjective evaluation (30%).

Let $A$ be the robot's volume and $B$ its weight, and let $H_1$, $H_2$ and $H_3$ be the numbers of sea cucumbers, scallops and sea urchins grasped. Each team's grasping score is $A_1 H_1 + A_2 H_2 + A_3 H_3$, and the highest such score among all teams is used as the normalization factor $H_4$. The weighting factors are $A_1 = 2$, $A_2 = 1$ and $A_3 = 1$ (sea cucumbers have weight 2; sea urchins and scallops have weight 1). The objective score is calculated according to the following table:

| Evaluation type | Evaluation parameter | Category / description | Number grasped | Score |
|---|---|---|---|---|
| Objective evaluation | Grab ability (50 points; scored only by the on-site referee) | Sea cucumber | | |
| | | Scallop | | |
| | | Sea urchin | | |
| | Mobility (30 points) | Autonomously traversing 4 points within 30 minutes earns 30 points (7.5 points per point) | | |
| Subjective evaluation | Product integrity (10 points; scored only by the on-site referee) | Integrity of the caught seafood | | |
| | Comprehensive evaluation (10 points) | Overall evaluation of robot technology, robot movement, robot performance, etc. | | |
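The objective part of the score can be sketched as follows. The weights $A_1 = 2$, $A_2 = 1$, $A_3 = 1$ and the 7.5 points per traversal point come from the rules above. How the normalization factor $H_4$ is applied (grab points = 50 × raw score / $H_4$) is an assumption, since the rules do not spell it out. Counts should include only seafood delivered to the shore location or carried by the robot at unloading, with empty scallops excluded. Names and example data are hypothetical.

```python
WEIGHTS = {"sea_cucumber": 2, "scallop": 1, "sea_urchin": 1}  # A1, A2, A3
GRAB_MAX = 50.0
POINT_VALUE = 7.5  # mobility: 7.5 points per traversal point
MAX_POINTS = 4


def grab_raw(counts):
    """A1*H1 + A2*H2 + A3*H3 for one team (counts keyed like WEIGHTS)."""
    return sum(WEIGHTS[k] * counts.get(k, 0) for k in WEIGHTS)


def objective_scores(teams):
    """teams: name -> (grab counts, traversal points reached).

    ASSUMPTION: grab points = 50 * raw / H4, where H4 is the best raw score.
    """
    h4 = max(grab_raw(c) for c, _ in teams.values())
    out = {}
    for name, (counts, points) in teams.items():
        grab = GRAB_MAX * grab_raw(counts) / h4 if h4 > 0 else 0.0
        mobility = POINT_VALUE * min(points, MAX_POINTS)
        out[name] = grab + mobility
    return out


teams = {
    "A": ({"sea_cucumber": 3, "scallop": 2, "sea_urchin": 1}, 4),  # raw 9
    "B": ({"sea_cucumber": 1, "scallop": 2, "sea_urchin": 1}, 2),  # raw 5
}
print(objective_scores(teams))  # A: 50 + 30 = 80.0; B: 250/9 + 15
```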

(6) Special cases

1) If the robot fails and cannot complete the game, its score is invalid.

2) Independent research and development is encouraged, to showcase home-grown technology.

3) Low cost is encouraged, to ensure cost fairness.